UTStatsDB is a player and match statistics system for Unreal Tournament 99, 2003, 2004 and 3, which parses match logs generated by each game (sometimes requiring additional server-side mutators), and makes stats for each game available through a website.

The stats are also aggregated by player, map and server, allowing you to browse and analyse quite a number of in-depth stats for each.

The project was developed and maintained by Patrick Contreras and Paul Gallier between 2002 and around 2009, and seems to have been abandoned some time after the release of UT3. (Addendum: by some coincidence, after 9 years of inactivity, the original author published a new release a few days after my revival release.) Locating downloads (the download page is/was not working) or the source (their SCM system seems to require auth or is simply gone) was quite troublesome.

Thankfully it was released under the GPL v2, so I’ve taken it upon myself to be this project’s curator. (Addendum: since the original author also made a new release, I may now need to look into a rename or major version bump.) I have since released two new versions, 3.08 and 3.09, which focus firstly on getting PHP support up to scratch so it runs without issue on PHP 7+, and secondly on implementing PHP’s PDO database abstraction layer for DB access, rather than using each of the supported DB drivers (MySQL, MSSQL, SQLite) directly.

In addition to fixing many other bugs and issues, I’ve thus far revised the presentation significantly, added Docker support, and improved the performance of several SQL operations through caching and better queries.

UTStatsDB can be found on GitHub, where the latest release can also be downloaded.

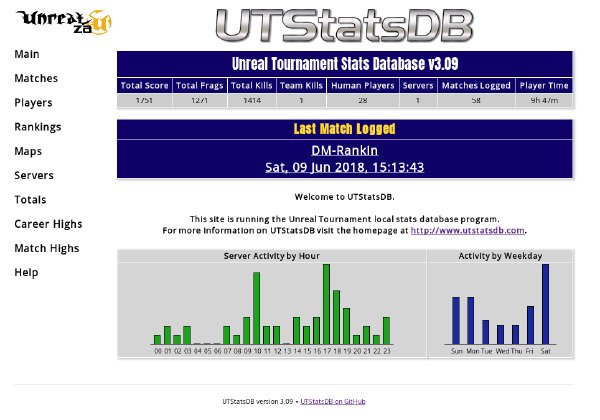

A live example of UTStatsDB in action can be found at the UnrealZA stats site.